Provisioning complex cloud architectures can be as simple as executing a single command. However, it can be challenging to fully automate the infrastructure without performing some manual configuration after the resources are provisioned. Some services, such as Zookeeper, need information about the other machines in the architecture in order to form a cluster. Is it possible to fully automate the creation and configuration of a Zookeeper cluster so that it is up and running as soon as provisioning completes? In this article, we will show you how to do this with AWS and Ansible.

In this article, we'll discuss three options for creating and configuring Zookeeper instances:

Scripted Zookeeper node discovery

Zookeeper node management with Exhibitor

Stateless configuration-driven Zookeeper nodes

Option 1: Scripted Zookeeper Node Discovery

One approach is to create an auto scaling group in AWS, store the configuration for each Zookeeper instance in S3, and write a script that injects it into each instance when it launches.

This option requires custom scripting, which can be hard to maintain, error-prone, and time-consuming, and can lead to non-deterministic results. For example, as the Zookeeper instances go live in the auto scaling group, each one performs a discovery step to determine its server ID. Server IDs must be unique for Zookeeper to cluster properly, but if two instances go live at the same time, they can easily end up with the same ID (we saw this happen in a few of our tests). Furthermore, since we do not know the IP addresses of the instances before they are allocated, we need to clean up the configuration file whenever an instance goes down and another comes up in its place; otherwise, the Zookeeper cluster will fail. Automating that cleanup requires even more scripting!
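To make the ID collision concrete, here is a minimal Python simulation. The article does not show its discovery script, so the "read the highest registered ID, then claim the next one" rule and the shared registry list are assumptions chosen to illustrate the race: when two instances read the registry before either writes back, both claim the same ID.

```python
from typing import List


def next_server_id(registry: List[int]) -> int:
    """Hypothetical discovery rule: claim the ID after the highest one already registered."""
    return max(registry, default=0) + 1


def boot_sequentially(registry: List[int], count: int) -> List[int]:
    """Each instance reads AND writes the registry before the next one starts."""
    claimed = []
    for _ in range(count):
        new_id = next_server_id(registry)
        registry.append(new_id)
        claimed.append(new_id)
    return claimed


def boot_concurrently(registry: List[int], count: int) -> List[int]:
    """All instances read the same snapshot before any of them writes back."""
    snapshot = list(registry)  # every instance sees identical, soon-to-be-stale state
    claimed = [next_server_id(snapshot) for _ in range(count)]
    registry.extend(claimed)   # writes land only after all the reads
    return claimed


if __name__ == "__main__":
    print(boot_sequentially([1, 2, 3], 2))  # [4, 5]: unique IDs
    print(boot_concurrently([1, 2, 3], 2))  # [4, 4]: duplicate IDs
```

Avoiding this in a script means adding locking or a compare-and-set against the shared store, which is exactly the kind of extra custom code this option tends to accumulate.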

Option 2: Zookeeper Node Management With Exhibitor

Another approach is to use a tool such as Netflix's Exhibitor, which discovers and configures Zookeeper nodes for you.

This approach works, but it adds new dependencies and infrastructure that must themselves be managed. It is a reasonable option, just not the focus of this article.

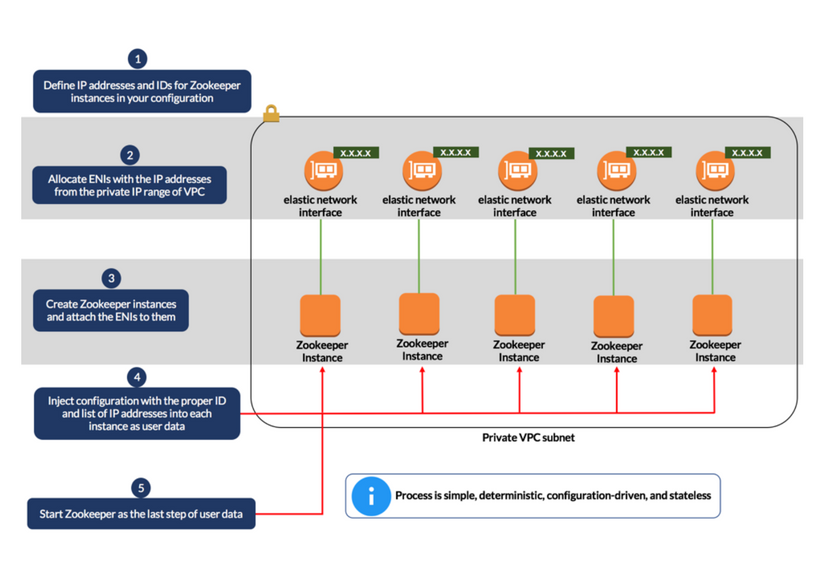

Option 3: Stateless Configuration-Driven Zookeeper Nodes

A third approach, which we used on a current project, is to configure the Zookeeper instances in AWS to be aware of each other automatically.

Instead of creating an auto scaling group and discovering the IP addresses of the Zookeeper instances as they spin up, we used AWS Elastic Network Interfaces (ENIs) to reserve an IP address for each Zookeeper instance and attached one ENI to each instance. Because we chose those IP addresses ourselves from the private IP range of our virtual private cloud (VPC), we could list them directly in the Zookeeper configuration. With an auto scaling group this wouldn't be feasible, since we wouldn't control the IP addresses. In addition, each instance had its unique server ID pre-assigned. Using this approach, we were able to fully automate the creation and configuration of our Zookeeper cluster.
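The key property here is that the `server.N` lines in zoo.cfg depend only on each member's (server ID, IP) pair, not on which EC2 instance currently holds that IP. The small Python sketch below makes that explicit; the `Member` type, the helper name, and all IPs and instance IDs are illustrative placeholders, not part of the original setup. Replacing a failed instance behind the same ENI leaves the rendered lines unchanged, while an auto-scaling-style replacement that receives a new IP changes them, so every member's configuration would go stale.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Member:
    server_id: int    # pre-assigned ID, written to the node's myid file
    private_ip: str   # IP address the node is reachable at
    instance_id: str  # whichever EC2 instance currently holds that IP


def server_lines(members: List[Member]) -> List[str]:
    """Render zoo.cfg server.N lines; instance_id deliberately plays no part."""
    return [
        f"server.{m.server_id}={m.private_ip}:2888:3888"
        for m in sorted(members, key=lambda member: member.server_id)
    ]


# Placeholder values for illustration only.
ensemble = [
    Member(1, "10.0.1.11", "i-aaaa"),
    Member(2, "10.0.1.12", "i-bbbb"),
    Member(3, "10.0.1.13", "i-cccc"),
]

# ENI approach: member 2's instance fails and its ENI (and IP) moves to a new instance.
eni_moved = [replace(m, instance_id="i-dddd") if m.server_id == 2 else m for m in ensemble]

# Auto scaling approach (hypothetical): the replacement also receives a new IP.
asg_replaced = [
    replace(m, instance_id="i-dddd", private_ip="10.0.1.57") if m.server_id == 2 else m
    for m in ensemble
]

print(server_lines(ensemble) == server_lines(eni_moved))     # True
print(server_lines(ensemble) == server_lines(asg_replaced))  # False
```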

The following steps demonstrate how this option can be implemented in Ansible.

Step 1: State Your Zookeeper Configuration

In the vars.yml file, create the following variables to set the IP addresses and IDs of your Zookeeper instances. zk_ips is a list of IP addresses you choose from the private IP range of your VPC (they must fall within the subnet where the ENIs will be created), and zk_ids is a list of unique server IDs:

```yaml
# Zookeeper private IP addresses
zk_ips: [ "10.0.x.x", "10.0.x.x", "10.0.x.x", "10.0.x.x", "10.0.x.x" ]

# Zookeeper server IDs
zk_ids: [ "1", "2", "3", "4", "5" ]
```
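Because every node trusts these two lists blindly, a quick pre-flight check can catch mistakes before anything is provisioned. The Python sketch below is one way to do it; the function name, the `10.0.1.0/24` subnet, and the concrete addresses standing in for the `10.0.x.x` placeholders are all assumptions. It checks that the lists line up, that IDs are unique integers between 1 and 255 (the range the ZooKeeper Administrator's Guide gives for `myid`), and that each IP lies inside the subnet while avoiding the five addresses AWS reserves in every subnet (the first four and the last).

```python
import ipaddress
from typing import List, Sequence


def validate_ensemble(zk_ips: Sequence[str], zk_ids: Sequence[str], subnet_cidr: str) -> List[str]:
    """Return human-readable problems with zk_ips / zk_ids; an empty list means none were found."""
    problems: List[str] = []
    subnet = ipaddress.ip_network(subnet_cidr)
    # AWS reserves the first four addresses and the last address of every subnet.
    reserved = {subnet.network_address + i for i in range(4)} | {subnet.broadcast_address}

    if len(zk_ips) != len(zk_ids):
        problems.append(f"{len(zk_ips)} IPs but {len(zk_ids)} server IDs")
    if len(set(zk_ips)) != len(zk_ips):
        problems.append("duplicate IP addresses")
    if len(set(zk_ids)) != len(zk_ids):
        problems.append("duplicate server IDs")

    for raw_id in zk_ids:
        # myid must contain a unique integer between 1 and 255.
        if not raw_id.isdecimal() or not 1 <= int(raw_id) <= 255:
            problems.append(f"server ID {raw_id!r} is not an integer between 1 and 255")

    for raw_ip in zk_ips:
        try:
            ip = ipaddress.ip_address(raw_ip)
        except ValueError:
            problems.append(f"{raw_ip!r} is not a valid IP address (placeholder left in?)")
            continue
        if ip not in subnet:
            problems.append(f"{raw_ip} is outside {subnet}")
        elif ip in reserved:
            problems.append(f"{raw_ip} is reserved by AWS in {subnet}")
    return problems


if __name__ == "__main__":
    # Hypothetical concrete values in place of the 10.0.x.x placeholders.
    zk_ips = ["10.0.1.10", "10.0.1.11", "10.0.1.12", "10.0.1.13", "10.0.1.14"]
    zk_ids = ["1", "2", "3", "4", "5"]
    print(validate_ensemble(zk_ips, zk_ids, "10.0.1.0/24"))  # []
```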

Step 2: Create the ENIs

In this step, create the ENIs and associate the Zookeeper IP addresses with them. The ENIs reserve those IP addresses for the Zookeeper instances. If an instance goes down, you can either restart it or launch a replacement instance that uses the same ENI. (In Step 3, each ENI becomes its instance's primary network interface, which cannot be detached from a running instance; terminate the failed instance first and the ENI becomes available again.) Either way, there is no need to update the configuration of the other Zookeeper instances, which saves time and avoids the stale-configuration failures described in Option 1.

```yaml
- name: Create Zookeeper ENIs
  ec2_eni:
    region: "{{ aws_region }}"
    device_index: 1
    private_ip_address: "{{ item }}"
    subnet_id: "{{ private_subnet_id }}"
    state: present
    security_groups:
      - "{{ private_sg_id }}"
      - "{{ zk_sg_id }}"
      - "{{ zk_ext_sg_id }}"
  with_items: "{{ zk_ips }}"
  register: eni_zk
```
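For the replacement path, here is a minimal sketch using boto3's EC2 client (`describe_network_interfaces` and `run_instances`), outside Ansible. It assumes the failed instance has already been terminated, since an ENI serving as an instance's primary interface cannot be detached while that instance exists. The sketch polls until the ENI reports `available`, then launches one new instance with the ENI as its primary interface (device index 0), mirroring what Step 3 does. The function names, polling intervals, and the idea of reusing the Step 3 bootstrap script as `user_data` are assumptions; the client is passed in so the logic can be exercised without AWS.

```python
import time
from typing import Any


def wait_for_eni_available(ec2: Any, eni_id: str, timeout_s: float = 300.0, poll_s: float = 5.0) -> None:
    """Poll describe_network_interfaces until the ENI's Status is "available"."""
    deadline = time.monotonic() + timeout_s
    while True:
        resp = ec2.describe_network_interfaces(NetworkInterfaceIds=[eni_id])
        status = resp["NetworkInterfaces"][0]["Status"]
        if status == "available":
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{eni_id} is still {status!r} after {timeout_s}s")
        time.sleep(poll_s)


def relaunch_on_eni(ec2: Any, eni_id: str, image_id: str, instance_type: str,
                    key_name: str, user_data: str, poll_s: float = 5.0) -> str:
    """Launch one instance whose primary interface (device index 0) is the existing ENI."""
    wait_for_eni_available(ec2, eni_id, poll_s=poll_s)
    resp = ec2.run_instances(
        ImageId=image_id,
        InstanceType=instance_type,
        KeyName=key_name,
        MinCount=1,
        MaxCount=1,
        # Assumed: the same bootstrap script as Step 3, rendered with this node's server ID.
        UserData=user_data,
        NetworkInterfaces=[{"DeviceIndex": 0, "NetworkInterfaceId": eni_id}],
    )
    return resp["Instances"][0]["InstanceId"]
```

With boto3 installed, you would pass `boto3.client("ec2", region_name=...)` as `ec2` and use the ENI ID that Ansible registered in `eni_zk` (each result's `interface.id`). Because the replacement keeps the same IP address, and its bootstrap script writes the same server ID, the rest of the ensemble needs no changes.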

Step 3: Create and Configure the Zookeeper Instances

Now attach the ENIs to the Zookeeper instances and run a bash script during startup. The bash script under user_data is responsible for installing Zookeeper and configuring the Zookeeper instances.

```yaml
# Launch one instance per ENI. with_together pairs each registered ENI (item.0)
# with the server ID at the same position in zk_ids (item.1).
- name: Create and Configure Zookeeper instances
  ec2:
    key_name: "{{ key_name }}"
    region: "{{ aws_region }}"
    instance_type: t2.micro
    image: "{{ amazon_linux_ami }}"
    wait: yes
    count: 1
    network_interfaces: "{{ item.0.interface.id }}"
    instance_tags:
      Name: "{{ zk_name }}"
    user_data: |
      #!/bin/bash
      # Install Zookeeper under /opt/zookeeper
      sudo yum -y update
      cd ~
      sudo wget http://apache.mirrors.lucidnetworks.net/zookeeper/zookeeper-{{version}}/zookeeper-{{version}}.tar.gz
      sudo tar -xf zookeeper-{{version}}.tar.gz -C /opt/
      cd /opt
      sudo mv zookeeper-* zookeeper
      # Write the ensemble configuration; it is identical on every node
      cd /opt/zookeeper/conf
      echo '# This file has been generated automatically at boot' >> zoo.cfg
      echo 'tickTime=2000' >> zoo.cfg
      echo 'dataDir=/home/ec2-user/zookeeper' >> zoo.cfg
      echo 'clientPort=2181' >> zoo.cfg
      echo 'initLimit=5' >> zoo.cfg
      echo 'syncLimit=2' >> zoo.cfg
      echo 'server.{{zk_ids[0]}}={{zk_ips[0]}}:2888:3888' >> zoo.cfg
      echo 'server.{{zk_ids[1]}}={{zk_ips[1]}}:2888:3888' >> zoo.cfg
      echo 'server.{{zk_ids[2]}}={{zk_ips[2]}}:2888:3888' >> zoo.cfg
      echo 'server.{{zk_ids[3]}}={{zk_ips[3]}}:2888:3888' >> zoo.cfg
      echo 'server.{{zk_ids[4]}}={{zk_ips[4]}}:2888:3888' >> zoo.cfg
      # Write this node's server ID to myid, then start Zookeeper
      sudo mkdir /home/ec2-user/zookeeper
      sudo touch /home/ec2-user/zookeeper/myid
      sudo sh -c 'echo {{item.1}} >> /home/ec2-user/zookeeper/myid'
      cd /home/ec2-user/zookeeper
      sudo chown ec2-user:ec2-user myid
      sudo /opt/zookeeper/bin/zkServer.sh start
  with_together:
    - "{{ eni_zk.results }}"
    - "{{ zk_ids }}"
  register: zk_instances
```
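The user_data script writes the same zoo.cfg on every node and differs only in myid. It also hard-codes five `server.N` lines, so it has to be edited whenever the ensemble size changes. As an illustration (not the article's approach), the Python sketch below renders the same file contents by looping over the two lists instead. The function name and sample addresses are hypothetical; the settings are copied from the script.

```python
from typing import Sequence, Tuple

# Settings copied from the user_data script above.
BASE_SETTINGS = (
    "# This file has been generated automatically at boot",
    "tickTime=2000",
    "dataDir=/home/ec2-user/zookeeper",
    "clientPort=2181",
    "initLimit=5",
    "syncLimit=2",
)


def render_node_files(zk_ips: Sequence[str], zk_ids: Sequence[str], node_index: int) -> Tuple[str, str]:
    """Return (zoo.cfg, myid) contents for the node at position node_index.

    zoo.cfg is identical for every node; only myid differs, which is what pairing
    each ENI with an ID through with_together achieves in the Ansible task.
    """
    if len(zk_ips) != len(zk_ids):
        raise ValueError("zk_ips and zk_ids must have the same length")
    servers = [f"server.{sid}={ip}:2888:3888" for ip, sid in zip(zk_ips, zk_ids)]
    zoo_cfg = "\n".join([*BASE_SETTINGS, *servers]) + "\n"
    myid = f"{zk_ids[node_index]}\n"
    return zoo_cfg, myid


if __name__ == "__main__":
    ips = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]  # placeholder addresses
    ids = ["1", "2", "3"]
    cfg, myid = render_node_files(ips, ids, node_index=1)
    print(cfg, end="")
    print(myid, end="")  # 2
```

Inside Ansible itself, the same loop could be expressed with a Jinja2 template. Either way, the design choice is the same one the article makes: derive every node's configuration from the two variables so that nothing has to be discovered at boot.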

This approach is completely configuration driven and adds no new dependencies. However, it does not recover automatically: an instance that goes down stays down until we restart or replace it, and if three of the five instances are down at the same time, the ensemble loses its quorum and stops serving requests. The good news is that once a Zookeeper instance comes back up, it rejoins the cluster. There is no need to clean up the configuration file, since the instances come back up with the same IP addresses and IDs.
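Since recovery is manual, it helps to know how many failures the ensemble can absorb and which nodes are actually serving. The Python sketch below does both. A ZooKeeper ensemble of n servers needs a majority (n // 2 + 1) up, so five servers tolerate two failures. The `srvr` four-letter-word command reports a server's `Mode:` (leader, follower, or standalone). In ZooKeeper 3.5 and later, four-letter words must be whitelisted via `4lw.commands.whitelist`, and `srvr` is on the default list. The function names, timeout, and the choice to treat "unreachable" as `None` are assumptions.

```python
import socket
from typing import Optional


def quorum_size(ensemble_size: int) -> int:
    """Smallest majority of the ensemble; this many servers must be up."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many servers can be down while a majority remains."""
    return ensemble_size - quorum_size(ensemble_size)


def parse_mode(srvr_output: str) -> Optional[str]:
    """Extract the value of the 'Mode:' line from `srvr` output, if present."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def server_mode(host: str, port: int = 2181, timeout_s: float = 3.0) -> Optional[str]:
    """Send `srvr` to one server and return its mode, or None if it is unreachable."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s) as sock:
            sock.sendall(b"srvr")
            chunks = []
            while True:  # the server closes the connection after replying
                data = sock.recv(4096)
                if not data:
                    break
                chunks.append(data)
    except OSError:  # refused, timed out, unreachable, ...
        return None
    return parse_mode(b"".join(chunks).decode("utf-8", errors="replace"))


if __name__ == "__main__":
    print(quorum_size(5), tolerated_failures(5))  # 3 2
```

Calling `server_mode(ip)` for each address in zk_ips gives a quick health check: the healthy ensemble shows exactly one `leader`, and any `None` marks an instance to restart or replace.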

Need help?

Do you need help automating the creation and configuration of your cloud architecture? Credera has extensive experience in cloud infrastructure automation. We would love to discuss potential cloud and infrastructure automation solutions with you—contact us at marketing@credera.com.
